Azure Data Factory: 7 Powerful Features You Must Know

If you’re dealing with data in the cloud, Azure Data Factory deserves a close look. This managed data integration (ETL/ELT) service simplifies how you ingest, transform, and move data across diverse sources, often without writing any code. Let’s dive into what makes it so useful.

What Is Azure Data Factory?

Azure Data Factory (ADF) is Microsoft’s cloud-based data integration service that enables organizations to create data-driven workflows for orchestrating and automating data movement and transformation. It’s a core component of Microsoft Azure’s data platform, designed primarily for scheduled (batch) and event-triggered processing of data from on-premises and cloud environments.

Core Purpose and Vision

The primary goal of Azure Data Factory is to streamline the process of building scalable, reliable, and maintainable data pipelines. Unlike traditional ETL tools that require heavy infrastructure, ADF operates entirely in the cloud, allowing developers and data engineers to focus on logic rather than infrastructure management.

- Enables serverless data integration

- Supports hybrid data scenarios (cloud + on-premises)

- Integrates seamlessly with other Azure services like Azure Synapse, Azure Databricks, and Power BI

According to Microsoft’s official documentation, ADF is “a fully managed cloud service for orchestrating and automating data movement and data transformation.” You can learn more at Microsoft Learn: Azure Data Factory Overview.

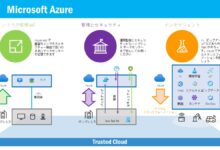

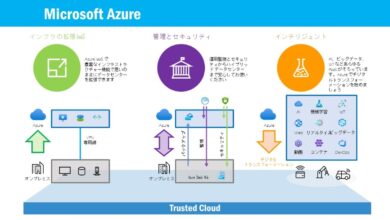

How It Fits Into Modern Data Architecture

In today’s data-driven world, companies collect information from countless sources—CRM systems, IoT devices, social media, and legacy databases. Azure Data Factory acts as the central nervous system that connects these silos, transforming raw data into actionable insights.

“Azure Data Factory allows enterprises to build complex data workflows without managing any infrastructure—making it a cornerstone of modern cloud data platforms.”

It supports ELT (Extract, Load, Transform) patterns, aligning well with modern data warehouse architectures like data lakes and lakehouses. By integrating with Azure Data Lake Storage and Azure Synapse Analytics, ADF enables scalable analytics at enterprise levels.

Key Components of Azure Data Factory

To understand how Azure Data Factory works, you need to know its building blocks. Each component plays a critical role in designing, executing, and monitoring data pipelines.

Pipelines and Activities

A pipeline in Azure Data Factory is a logical grouping of activities that perform a specific task. For example, a pipeline might extract sales data from an on-premises SQL Server, transform it using Azure Databricks, and load it into Azure Synapse for reporting.

- Copy Activity: Moves data from source to destination with high throughput and built-in connectivity.

- Data Flow Activity: Enables visual data transformation using a no-code interface powered by Spark.

- Control Activities: Orchestrate pipeline execution (e.g., If Condition, ForEach, Execute Pipeline).

These activities are orchestrated through a JSON-based definition, which can be managed via the Azure portal, PowerShell, SDKs, or CI/CD pipelines.
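To make the JSON-based definition concrete, here is a minimal sketch that builds one in Python as a plain dictionary. It chains a Copy activity to an Execute Pipeline control activity. All names (SalesPipeline, RawSales, StagedSales, LoadWarehouse) are hypothetical placeholders, and the shape follows the pipeline JSON format as I understand it rather than an export from a real factory.

```python
import json


def make_pipeline() -> dict:
    """Build a two-activity pipeline definition (names are illustrative only)."""
    copy_activity = {
        "name": "CopySales",
        "type": "Copy",
        "inputs": [{"referenceName": "RawSales", "type": "DatasetReference"}],
        "outputs": [{"referenceName": "StagedSales", "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "DelimitedTextSource"},
            "sink": {"type": "DelimitedTextSink"},
        },
    }
    run_child = {
        "name": "RunLoad",
        "type": "ExecutePipeline",
        # Control-flow edge: start only after CopySales has succeeded.
        "dependsOn": [
            {"activity": "CopySales", "dependencyConditions": ["Succeeded"]}
        ],
        "typeProperties": {
            "pipeline": {"referenceName": "LoadWarehouse", "type": "PipelineReference"},
            "waitOnCompletion": True,
        },
    }
    return {
        "name": "SalesPipeline",
        "properties": {"activities": [copy_activity, run_child]},
    }


pipeline = make_pipeline()
print(json.dumps(pipeline, indent=2))
```

The `dependsOn` entry is what turns a flat list of activities into an ordered workflow.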

Linked Services and Datasets

Linked services define the connection information needed to connect to external resources. Think of them as connection strings with additional metadata. For example, a linked service might store credentials for connecting to an Azure Blob Storage account or an on-premises Oracle database.

- Securely store authentication details

- Support a wide range of data stores including SaaS apps like Salesforce

- Enable reuse across multiple pipelines

Datasets, on the other hand, represent the structure and location of data within a linked service. They don’t contain the actual data but describe how to reference it—like a table in SQL Server or a folder in Azure Data Lake.
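The relationship between the two is easier to see side by side. The sketch below, again using plain Python dictionaries, shows a Blob Storage linked service and a delimited-text dataset that points at it. The names BlobStorageLS and SalesCsv, the container and path, and the connection string placeholders are all assumptions made for illustration.

```python
import json

# The linked service holds the connection details (placeholders, not real credentials).
linked_service = {
    "name": "BlobStorageLS",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {
            "connectionString": (
                "DefaultEndpointsProtocol=https;"
                "AccountName=<account>;AccountKey=<key>"
            )
        },
    },
}

# The dataset describes where the data lives and how it is shaped.
# It refers to the linked service by name and holds no data itself.
dataset = {
    "name": "SalesCsv",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": linked_service["name"],
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "raw",
                "folderPath": "sales",
                "fileName": "sales.csv",
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": True,
        },
    },
}

print(json.dumps(dataset, indent=2))
```

Because the dataset only references the linked service, several datasets can share one connection.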

Integration Runtime (IR)

The Integration Runtime is the backbone of connectivity in Azure Data Factory. It’s the compute infrastructure that performs data movement and dispatches activities, and it comes in several types:

- Azure IR: For cloud-to-cloud data movement.

- Self-hosted IR: Enables secure communication between cloud ADF and on-premises data sources.

- Azure IR in a managed virtual network: For secure, private data movement within a virtual network.

- Azure-SSIS IR: Runs SSIS packages in a managed environment (covered later in this article).

Without a self-hosted IR, ADF wouldn’t be able to reach internal databases or legacy systems behind firewalls. Setting one up involves installing a lightweight agent on an on-premises machine, which then communicates securely with the cloud service.
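In a definition, a linked service is routed through a particular runtime with a `connectVia` reference. Here is a minimal sketch for an on-premises SQL Server. The function name, the linked service name, the runtime name OnPremIR, and the connection string are all hypothetical.

```python
import json


def on_prem_sql_linked_service(name: str, ir_name: str, connection_string: str) -> dict:
    """Build a SQL Server linked service that connects through a named IR."""
    return {
        "name": name,
        "properties": {
            "type": "SqlServer",
            "typeProperties": {"connectionString": connection_string},
            # Route traffic through the self-hosted runtime installed on-premises.
            "connectVia": {
                "referenceName": ir_name,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


ls = on_prem_sql_linked_service(
    name="OnPremSqlLS",
    ir_name="OnPremIR",
    connection_string="Server=<server>;Database=<db>;Integrated Security=True",
)
print(json.dumps(ls, indent=2))
```

If `connectVia` is left out, the linked service falls back to the default Azure IR, which cannot see hosts behind a corporate firewall.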

Why Choose Azure Data Factory Over Alternatives?

With so many data integration tools available—like Informatica, Talend, AWS Glue, and Google Cloud Dataflow—why should you consider Azure Data Factory? The answer lies in its seamless integration, scalability, and cloud-native design.

Seamless Integration with the Microsoft Ecosystem

If your organization already uses Microsoft products like Office 365, Dynamics 365, or SQL Server, Azure Data Factory offers native connectors and optimized performance. It integrates with Microsoft Entra ID (formerly Azure Active Directory) for authentication, Azure Key Vault for secret management, and Azure Monitor for logging and alerting.

- More than 90 pre-built connectors for data sources

- Native support for Azure services like Event Hubs, Cosmos DB, and Logic Apps

- Loads the data stores that Power BI reports and dashboards read from

This ecosystem synergy reduces development time and increases reliability, especially in hybrid environments.

Serverless and Scalable Architecture

One of the most compelling advantages of Azure Data Factory is its serverless nature. You don’t need to provision or manage any virtual machines or clusters. When a pipeline runs, ADF automatically scales compute resources based on workload demands.

- No infrastructure to maintain

- Pay-per-execution pricing model

- Automatic scaling during peak loads

This makes it ideal for businesses with fluctuating data volumes, such as retail companies during holiday seasons or financial institutions processing end-of-day reports.

Visual Development and Code-Free Transformation

Azure Data Factory provides a drag-and-drop interface called the Data Factory UX (user experience), where you can build pipelines visually. This lowers the barrier to entry for non-developers and accelerates development cycles.

- Drag-and-drop pipeline designer

- Visual data flow editor with transformation templates

- Real-time debugging and monitoring

Even complex transformations can be built without writing code, thanks to the visual data flow feature powered by Apache Spark. However, for advanced users, ADF also supports custom code via notebooks in Azure Databricks or Azure Synapse.

Building Your First Pipeline in Azure Data Factory

Now that we’ve covered the theory, let’s walk through creating a simple ETL pipeline. This example will extract data from Azure Blob Storage, transform it, and load it into Azure SQL Database.

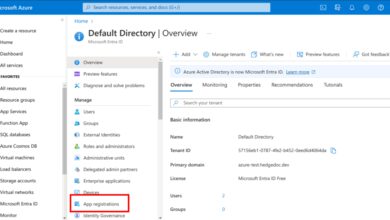

Step 1: Create a Data Factory Instance

Log in to the Azure Portal, navigate to “Create a resource,” search for “Data Factory,” and select it. Fill in the required details:

- Name: Unique name (e.g., myDataFactory01)

- Subscription: Your Azure subscription

- Resource Group: Create new or use existing

- Region: Choose closest geographic region

- Version: Select V2 (current)

After deployment, open the Data Factory studio to begin building pipelines.

Step 2: Set Up Linked Services

Before moving data, you need to connect to your sources and destinations. In the ADF studio, go to the “Manage” tab and create two linked services:

- Azure Blob Storage: Provide the storage account name and key.

- Azure SQL Database: Enter the server name, database name, and authentication method (SQL authentication or Microsoft Entra ID, formerly Azure AD).

Test the connections to ensure they work before proceeding.

Step 3: Define Datasets and Build the Pipeline

Next, define datasets for the source (Blob file) and sink (SQL table). Then, create a new pipeline and add a Copy Data activity.

- Set the source dataset to your Blob file (e.g., CSV or JSON)

- Set the sink dataset to your SQL table

- Map columns if needed

- Add a trigger to run the pipeline on schedule or manually

Once configured, publish the changes and trigger a run. Monitor the execution in the “Monitor” tab to verify success.
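Behind the visual designer, this step produces two JSON documents: the pipeline and its trigger. The sketch below builds rough equivalents in Python. SalesCsv and SalesTable stand in for the source and sink datasets, and the pipeline name, trigger name, and start time are made-up values.

```python
import json

copy_pipeline = {
    "name": "BlobToSqlPipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyBlobToSql",
                "type": "Copy",
                "inputs": [{"referenceName": "SalesCsv", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "SalesTable", "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},  # CSV file in Blob Storage
                    "sink": {"type": "AzureSqlSink"},  # table in Azure SQL Database
                },
            }
        ]
    },
}

# A schedule trigger that runs the pipeline once a day.
daily_trigger = {
    "name": "DailyTrigger",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",
                "interval": 1,
                "startTime": "2024-01-01T02:00:00Z",
                "timeZone": "UTC",
            }
        },
        "pipelines": [
            {
                "pipelineReference": {
                    "referenceName": copy_pipeline["name"],
                    "type": "PipelineReference",
                }
            }
        ],
    },
}

print(json.dumps(daily_trigger, indent=2))
```

Keeping the trigger separate from the pipeline means the same pipeline can also be run manually or attached to a different schedule.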

Advanced Capabilities: Data Flows and SSIS Integration

While basic copy operations are useful, Azure Data Factory shines when handling complex transformations and legacy workloads.

Visual Data Flows for No-Code Transformation

Data Flows allow you to perform ETL operations using a visual interface. Under the hood, ADF translates them into Spark jobs and runs them on Spark clusters that it provisions and manages for you, so there is no cluster to set up yourself.

- Supports data cleansing, aggregation, joins, and pivoting

- Handles schema drift when source columns change over time

- Generates optimized Spark code automatically

You can debug data flows interactively, preview data at each step, and optimize performance using partitioning and caching strategies.
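A data flow is run from a pipeline through a Data Flow activity, and the activity is also where the Spark compute size is chosen. The following is a minimal sketch of such an activity as a Python dictionary. The data flow name CleanSales and the core count are illustrative assumptions, and the property names reflect my reading of the activity’s JSON format.

```python
import json


def data_flow_activity(name: str, data_flow_name: str, core_count: int = 8) -> dict:
    """Build an activity that executes a named data flow on managed Spark."""
    return {
        "name": name,
        "type": "ExecuteDataFlow",
        "typeProperties": {
            "dataflow": {"referenceName": data_flow_name, "type": "DataFlowReference"},
            # Size of the managed Spark cluster used for this run.
            "compute": {"coreCount": core_count, "computeType": "General"},
        },
    }


activity = data_flow_activity("RunCleanSales", "CleanSales")
print(json.dumps(activity, indent=2))
```

Larger core counts shorten heavy transformations but cost more per run, so it is worth starting small and measuring.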

Migrating SSIS Packages to the Cloud

Many enterprises still rely on SQL Server Integration Services (SSIS) for mission-critical ETL jobs. Azure Data Factory supports SSIS migration through the Azure-SSIS Integration Runtime.

- Lift-and-shift existing SSIS packages to the cloud

- Run SSIS in a fully managed environment

- Scale out execution across multiple nodes

This feature bridges the gap between legacy systems and modern cloud architectures, enabling gradual digital transformation without rewriting existing logic.

Monitoring, Security, and Governance in Azure Data Factory

Enterprise-grade data pipelines require robust monitoring, security, and compliance controls. Azure Data Factory delivers on all fronts.

Real-Time Monitoring and Alerting

The “Monitor” section in ADF provides end-to-end visibility into pipeline runs, including duration, status, and error details. You can drill down into individual activity runs and view input/output parameters.

- Track failed runs and retry automatically

- Set up email or webhook alerts via Azure Monitor

- Export logs to Log Analytics for long-term analysis

Integration with Azure Application Insights allows for custom telemetry and performance tracking.
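Automatic retries are configured per activity through a `policy` block. Here is a small sketch of a helper that attaches one to an existing activity definition. The helper name `with_retry` and the default values are my own choices, not recommendations from the product documentation.

```python
import copy


def with_retry(activity: dict, retries: int = 3, interval_seconds: int = 60,
               timeout: str = "0.01:00:00") -> dict:
    """Return a copy of an activity definition with a retry policy attached.

    The timeout uses the d.hh:mm:ss format, so "0.01:00:00" means one hour.
    """
    patched = copy.deepcopy(activity)  # leave the caller's definition untouched
    patched["policy"] = {
        "retry": retries,
        "retryIntervalInSeconds": interval_seconds,
        "timeout": timeout,
    }
    return patched


base = {"name": "CopyBlobToSql", "type": "Copy", "typeProperties": {}}
resilient = with_retry(base, retries=2, interval_seconds=30)
print(resilient["policy"])
```

Retries help with transient faults such as throttling or brief network drops. A run that fails every attempt still shows up as failed in the Monitor tab.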

Role-Based Access Control and Data Protection

Security is paramount when dealing with sensitive data. Azure Data Factory supports Azure Role-Based Access Control (RBAC), allowing fine-grained permissions.

- Assign roles like Contributor, Reader, or Data Factory Contributor

- Integrate with Azure Key Vault to secure credentials

- Enable private endpoints to block public internet access

All data in transit is encrypted using TLS, and at rest using Azure Storage encryption. For regulated industries, ADF complies with standards like GDPR, HIPAA, and ISO 27001.
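The Key Vault integration is worth seeing in a definition, because it keeps secrets out of the JSON that ends up in source control. In this sketch an Azure SQL Database linked service fetches its password from a vault at run time. The vault URL, linked service names, and the secret name sql-password are placeholders.

```python
import json

# Linked service for the vault itself (the URL is a placeholder).
key_vault_ls = {
    "name": "KeyVaultLS",
    "properties": {
        "type": "AzureKeyVault",
        "typeProperties": {"baseUrl": "https://<vault-name>.vault.azure.net"},
    },
}

# The SQL linked service stores no password, only a pointer to the secret.
sql_ls = {
    "name": "AzureSqlLS",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            "connectionString": (
                "Data Source=tcp:<server>.database.windows.net,1433;"
                "Initial Catalog=<database>;User ID=<user>;Encrypt=True"
            ),
            "password": {
                "type": "AzureKeyVaultSecret",
                "store": {
                    "referenceName": key_vault_ls["name"],
                    "type": "LinkedServiceReference",
                },
                "secretName": "sql-password",
            },
        },
    },
}

print(json.dumps(sql_ls, indent=2))
```

Rotating the password then means updating one secret in the vault, with no change to the factory.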

Audit Logs and Compliance Reporting

For governance, ADF integrates with Azure Policy and Azure Blueprints to enforce organizational standards. Audit logs capture every change made to pipelines, datasets, and triggers.

- Track who changed what and when

- Generate compliance reports for auditors

- Integrate with SIEM tools like Microsoft Sentinel

This ensures transparency and accountability across data operations.

Best Practices for Optimizing Azure Data Factory Performance

To get the most out of Azure Data Factory, follow these proven best practices.

Optimize Copy Activity Settings

The Copy Activity is often the bottleneck in data pipelines. Tune it for performance by adjusting the following settings:

- Use binary copy when files are moved as-is, with no parsing or transformation

- Enable compression to reduce network overhead

- Adjust parallel copies and Data Integration Units (DIUs) based on source/destination capacity

For large datasets, consider using staged copy via Azure Blob Storage to improve throughput.
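These knobs live in the Copy activity’s `typeProperties`. Below is a sketch of a helper that applies them to an existing activity definition. The helper name `tune_copy`, the numbers, and the staging linked service and path are illustrative assumptions. Sensible values depend on your source and sink.

```python
import copy


def tune_copy(activity: dict, parallel_copies: int, dius: int,
              staging_linked_service: str, staging_path: str) -> dict:
    """Return a copy of a Copy activity with performance settings applied."""
    tuned = copy.deepcopy(activity)
    props = tuned.setdefault("typeProperties", {})
    props["parallelCopies"] = parallel_copies  # concurrent reads/writes
    props["dataIntegrationUnits"] = dius  # compute power for the copy
    props["enableStaging"] = True  # stage through Blob Storage first
    props["stagingSettings"] = {
        "linkedServiceName": {
            "referenceName": staging_linked_service,
            "type": "LinkedServiceReference",
        },
        "path": staging_path,
    }
    return tuned


base_copy = {
    "name": "CopyBlobToSql",
    "type": "Copy",
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},
        "sink": {"type": "AzureSqlSink"},
    },
}
tuned_copy = tune_copy(base_copy, parallel_copies=8, dius=16,
                       staging_linked_service="BlobStorageLS",
                       staging_path="staging/sales")
print(sorted(tuned_copy["typeProperties"]))
```

It is usually better to change one setting at a time and compare run durations than to raise everything at once.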

Use Parameterization and Templates

Make your pipelines reusable by parameterizing linked services, datasets, and pipelines. For example, use parameters for file names, folder paths, or database names.

- Create template pipelines for common patterns

- Pass parameters dynamically from triggers or external systems

- Use global parameters for constants like environment names

This approach enhances maintainability and supports DevOps practices.
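Parameterization is expressed with small expression objects inside the JSON. The sketch below defines a dataset with a fileName parameter and a pipeline that forwards its own parameter into it. The names ParamCsv, BlobStorageLS, and LoadOneFile are made up, and the lowercase `string` type follows what I have seen the designer emit.

```python
import json

# Dataset with one parameter, used in the file name via an expression.
param_dataset = {
    "name": "ParamCsv",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {"referenceName": "BlobStorageLS", "type": "LinkedServiceReference"},
        "parameters": {"fileName": {"type": "string"}},
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "raw",
                "fileName": {"value": "@dataset().fileName", "type": "Expression"},
            }
        },
    },
}

# Pipeline that accepts a file name and passes it down to the dataset.
param_pipeline = {
    "name": "LoadOneFile",
    "properties": {
        "parameters": {"fileName": {"type": "string", "defaultValue": "sales.csv"}},
        "activities": [
            {
                "name": "CopyFile",
                "type": "Copy",
                "inputs": [
                    {
                        "referenceName": param_dataset["name"],
                        "type": "DatasetReference",
                        "parameters": {
                            "fileName": {
                                "value": "@pipeline().parameters.fileName",
                                "type": "Expression",
                            }
                        },
                    }
                ],
                "outputs": [{"referenceName": "SalesTable", "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "AzureSqlSink"},
                },
            }
        ],
    },
}

print(json.dumps(param_pipeline["properties"]["parameters"], indent=2))
```

One dataset and one pipeline can now serve any file in the container, with the file name supplied by a trigger or a parent pipeline.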

Implement CI/CD Pipelines

Just like application code, data pipelines should be version-controlled and deployed via CI/CD. Use Azure DevOps or GitHub Actions to automate deployment across environments (dev, test, prod).

- Store ADF JSON definitions in Git repositories

- Use ARM templates or ADF’s built-in publishing mechanism

- Validate changes before promotion

This reduces human error and ensures consistency across deployments.
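One simple check to run in a CI job before promotion is that every dataset a pipeline references actually exists. The sketch below is a hypothetical validation helper, not part of ADF or its tooling. In a real repository you would load the pipeline and dataset JSON files from Git instead of using the inline sample.

```python
def find_references(node, ref_type: str) -> list:
    """Recursively collect referenceName values of the given reference type."""
    found = []
    if isinstance(node, dict):
        if node.get("type") == ref_type and "referenceName" in node:
            found.append(node["referenceName"])
        for value in node.values():
            found.extend(find_references(value, ref_type))
    elif isinstance(node, list):
        for item in node:
            found.extend(find_references(item, ref_type))
    return found


def missing_datasets(pipeline: dict, known_datasets: set) -> list:
    """Return dataset names the pipeline references but the repo does not define."""
    referenced = set(find_references(pipeline, "DatasetReference"))
    return sorted(referenced - set(known_datasets))


# Inline sample standing in for files read from the repository.
sample_pipeline = {
    "name": "BlobToSqlPipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyBlobToSql",
                "type": "Copy",
                "inputs": [{"referenceName": "SalesCsv", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "SalesTable", "type": "DatasetReference"}],
            }
        ]
    },
}

problems = missing_datasets(sample_pipeline, known_datasets={"SalesCsv"})
print(problems)  # ['SalesTable']
```

A CI step can fail the build whenever the returned list is not empty, which catches broken references before they reach the test or production factory.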

Real-World Use Cases of Azure Data Factory

Understanding theoretical concepts is important, but seeing how Azure Data Factory is used in real scenarios brings clarity.

Healthcare: Integrating Patient Data Across Systems

A large hospital network uses ADF to consolidate patient records from electronic health record (EHR) systems, lab results, and wearable devices into a centralized data lake. This enables real-time analytics for patient care and predictive modeling for disease outbreaks.

- ETL from HL7 and FHIR APIs

- Secure handling of PHI (Protected Health Information)

- Integration with Azure AI for diagnostic insights

The solution improves response times and reduces administrative overhead.

Retail: Unified Customer View for Personalization

An e-commerce company leverages ADF to combine online behavior, purchase history, and CRM data into a 360-degree customer profile. This unified view powers personalized recommendations and targeted marketing campaigns.

- Ingest clickstream data from web and mobile apps

- Enrich with demographic data from third-party sources

- Feed into Azure Machine Learning for churn prediction

As a result, conversion rates increased by 22% within six months.

Finance: Regulatory Reporting and Risk Analysis

A global bank uses ADF to automate daily risk reporting by pulling transaction data from core banking systems, applying fraud detection rules, and generating regulatory filings.

- Process terabytes of data nightly

- Ensure auditability and traceability

- Integrate with Power BI for executive dashboards

The automation reduced manual effort by 70% and improved report accuracy.

What is Azure Data Factory used for?

Azure Data Factory is used to create, schedule, and manage data pipelines that move and transform data across cloud and on-premises sources. It’s commonly used for ETL/ELT processes, data warehousing, real-time analytics, and integrating disparate data systems.

Is Azure Data Factory free to use?

No, Azure Data Factory is a paid service, although a small amount of usage may be covered by an Azure free account. Pricing is based on pipeline runs, data movement, and data flow execution. You only pay for what you use, making it cost-effective for variable workloads.

How does Azure Data Factory differ from Azure Synapse?

Azure Data Factory focuses on data integration and orchestration, while Azure Synapse combines data integration, data warehousing, and big data analytics. Synapse includes its own pipelines, built on the same integration technology as ADF, and adds deeper analytics capabilities on top.

Can Azure Data Factory run SSIS packages?

Yes, Azure Data Factory supports running SSIS packages through the Azure-SSIS Integration Runtime. This allows organizations to migrate their existing SSIS workloads to the cloud without rewriting them.

Does Azure Data Factory support real-time data processing?

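Partly. Azure Data Factory is primarily a batch orchestration service, but it supports event-driven runs through storage event and custom event triggers. A pipeline can start when a file lands in Blob Storage or a custom event arrives, and then process the data in near real time. For continuous stream processing, ADF is usually paired with a dedicated streaming service.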

Azure Data Factory is more than just a data movement tool—it’s a comprehensive platform for building intelligent, scalable, and secure data pipelines in the cloud. From simple ETL jobs to complex hybrid integrations, it empowers organizations to unlock the full potential of their data. Whether you’re migrating legacy systems, building a modern data lake, or automating business intelligence workflows, ADF provides the tools and flexibility you need. By leveraging its visual interface, serverless architecture, and deep Azure integration, you can accelerate your data journey and drive better business outcomes.
